A Monolith to Microservices Cloud Journey - Musings of the Advanced Developing on AWS Course

What is “Advanced Developing on AWS” and what to expect?

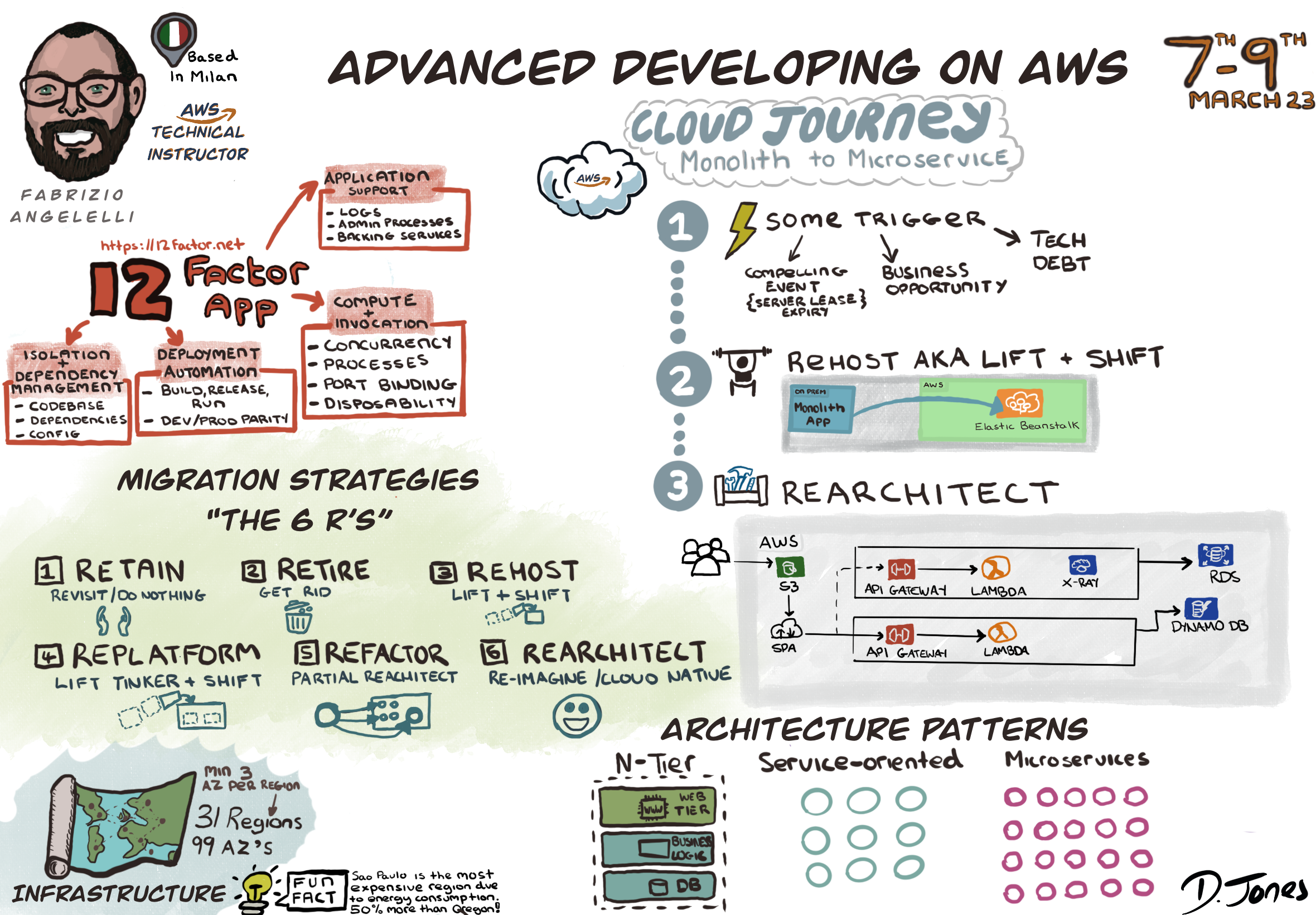

I recently completed the AWS training course “Advanced Developing on AWS,” which can be attended virtually or in person. I opted for the online delivery, which ran over three days from March 9th to March 11th, 2023. A typical day started around 8 AM (GMT) and aimed to finish around 4 PM, with roughly three 30-minute breaks to accommodate attendees in different time zones. Each day mixed classroom theory, delivered through a PowerPoint-style presentation, with lab sessions. The course was hosted by Fabrizio Angelelli, who has been delivering AWS courses as a technical instructor since 2016.

On the first day, Fabrizio invited each attendee to introduce themselves: their current role in the business, their level of AWS experience, the technologies and languages they were familiar with, and something fun such as the hobbies they enjoy outside of work. I thought this was a great icebreaker and a good way to get a baseline of the AWS experience in the room. After the introductions, we were given more context on what the course would teach us and how the content would be broken down.

The course was designed to:

“Guide engineers in deciding the best practices for migrating an on-premise monolithic application into a cloud-native microservices architecture while applying the 12-factor app methodology”

So let’s get into it: a deep dive into the topics that were covered on the Advanced Developing on AWS course, with my experiences along the way.

A Monolith to Microservices Cloud Journey

Cloud Journey

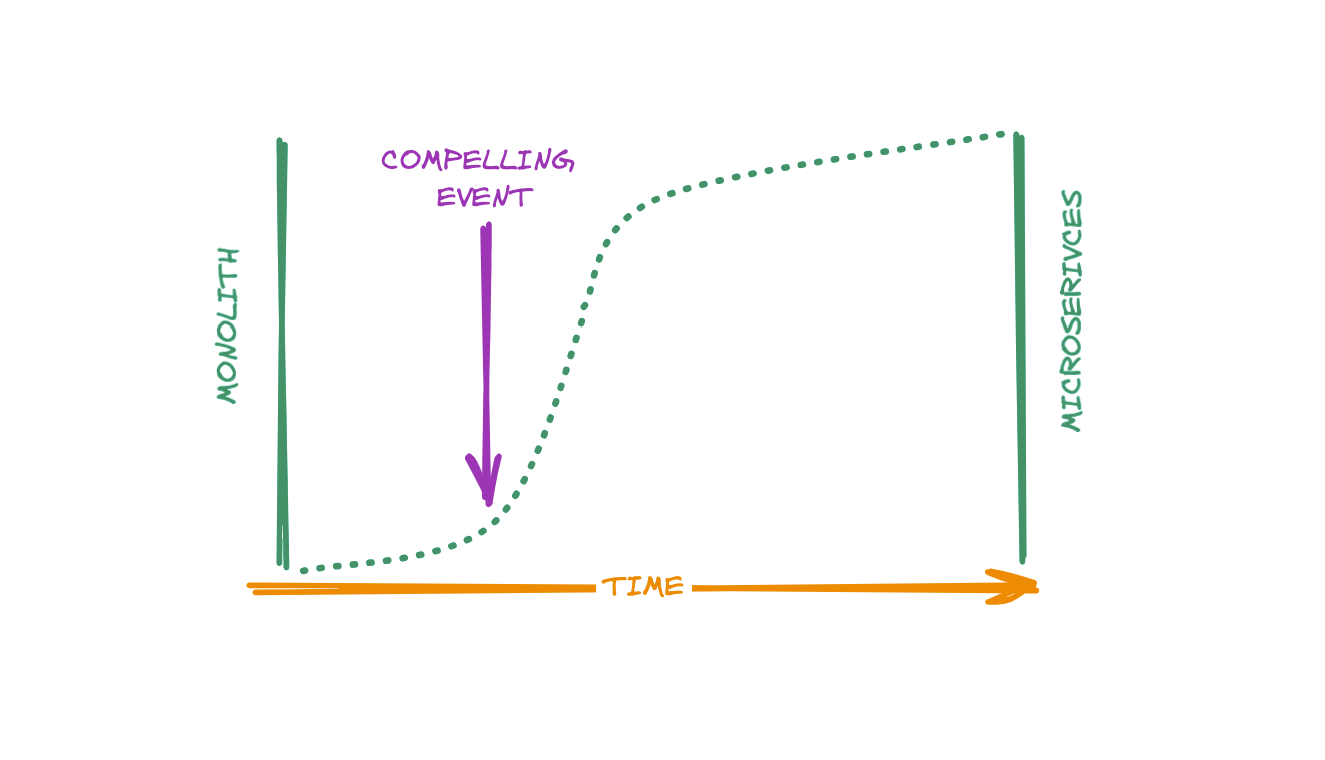

You might ask yourself what a cloud journey is and why an organisation would embark on such a quest. In short, a cloud journey is the process of migrating on-premise IT infrastructure to a cloud-based service such as AWS. The most common trigger for starting a cloud journey is some sort of compelling event. Compelling events can include but are not limited to:

- Datacenter lease expiry - logical point in time to migrate

- Technical debt - spiralled out of control with no or limited control over the infrastructure and cost

- Business opportunities such as application rewrites (typical 2.0 versions) or business investment (cash injection)

Benefits of migrating to the cloud

Migrating legacy on-premise infrastructure and applications to the cloud offers several benefits such as increased flexibility, scalability, and reliability. Cloud-based applications can be scaled up or down based on demand, and cloud providers offer reliable infrastructure with high availability and disaster recovery capabilities. Other benefits of migrating to the cloud include:

- Reduced operational costs

- Transparent pricing models

- Security building blocks such as encryption and identity and access management are provided out of the box, although configuring them correctly remains the customer’s responsibility

Choosing a cloud provider

There are numerous cloud providers available in the market, including prominent names like Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform (GCP), and IBM Cloud, among others. However, since this course specifically focuses on a cloud journey with a primary emphasis on AWS, we will delve into the infrastructure and explore the multitude of benefits it offers for organisations embarking on a cloud transformation.

Using AWS as a cloud provider offers several advantages:

- Extensive Geographic Coverage: AWS has a vast global infrastructure with 31 regions across the world. This geolocation capability allows businesses to deploy their resources closer to their target audience, reducing latency and improving performance.

- High Availability: AWS supports high availability through its 99 availability zones (AZs) at the time of writing. Each AZ consists of one or more discrete data centers located within a region. Having at least three AZs in each region enhances fault tolerance and enables businesses to design resilient and highly available architectures.

- Service Availability: AWS offers a wide range of services, divided into regional and global categories. Regional services are deployed in specific regions, while global services like Route 53 (AWS’s DNS service) are not tied to a single region. S3 (Simple Storage Service) sits somewhere in between: bucket names share a global namespace, but each bucket is created in a specific region. This flexibility allows businesses to select the services that best fit their requirements and expand their operations globally over time.

- Continuous Service Expansion: AWS continually introduces new services and features to enhance its offerings. While some services are initially launched in specific regions, they gradually roll out to other regions, providing users with access to cutting-edge technologies and innovations.

By leveraging AWS’s extensive regional coverage, high availability, diverse service options, and continuous expansion, businesses can benefit from a robust and scalable cloud infrastructure to meet their specific needs.

Fun fact: Sao Paulo, one of the AWS regions, stands out as the most expensive region due to higher energy consumption and prices. On average, it costs approximately 50% more than the Oregon region. This fact may be useful for businesses considering cost optimization and budget planning when selecting their AWS region.

The monolithic problem

In the course, we discussed a monolithic scenario that involved a travel platform catering to flight ticket purchases and hotel reservations. This platform called CloudAir revolved around a single, integrated application and a monolithic database, both hosted on-premises.

Upon assessment, it was evident that the platform faced significant challenges in terms of scalability and cost efficiency. As demand increased, the existing infrastructure required the addition of new server racks, resulting in higher expenses. Moreover, the release cycles were arduous and time-consuming, requiring extensive manual intervention for deployment. The application also suffered from:

- Tight coupling

- Issues with separation of concerns

- A high amount of technical debt

- Performance issues

- Data tier complexities

With the problems identified, it was time to set out some requirements and choose the appropriate architectural pattern and approach for migrating the existing platform to the cloud.

Requirements and architecture patterns

Let’s start with the requirements, which were pretty simple:

- Go faster

- Improve stability

- Increase quality

- Improve release cycles

- And finally, save money!

The course covered various architectural patterns such as N-tier, service-oriented architecture (SOA), microservices, model-view-controller (MVC), pub-sub and CQRS. We are not going to cover all these patterns in detail in this blog but will focus on microservice architecture, since that was the aim of the course: “A Monolith to Microservices Cloud Journey”. If you want to learn more about the other patterns, I suggest you check out Red Hat’s blog, which does a solid job of explaining each one, but for now let’s delve into the microservice pattern.

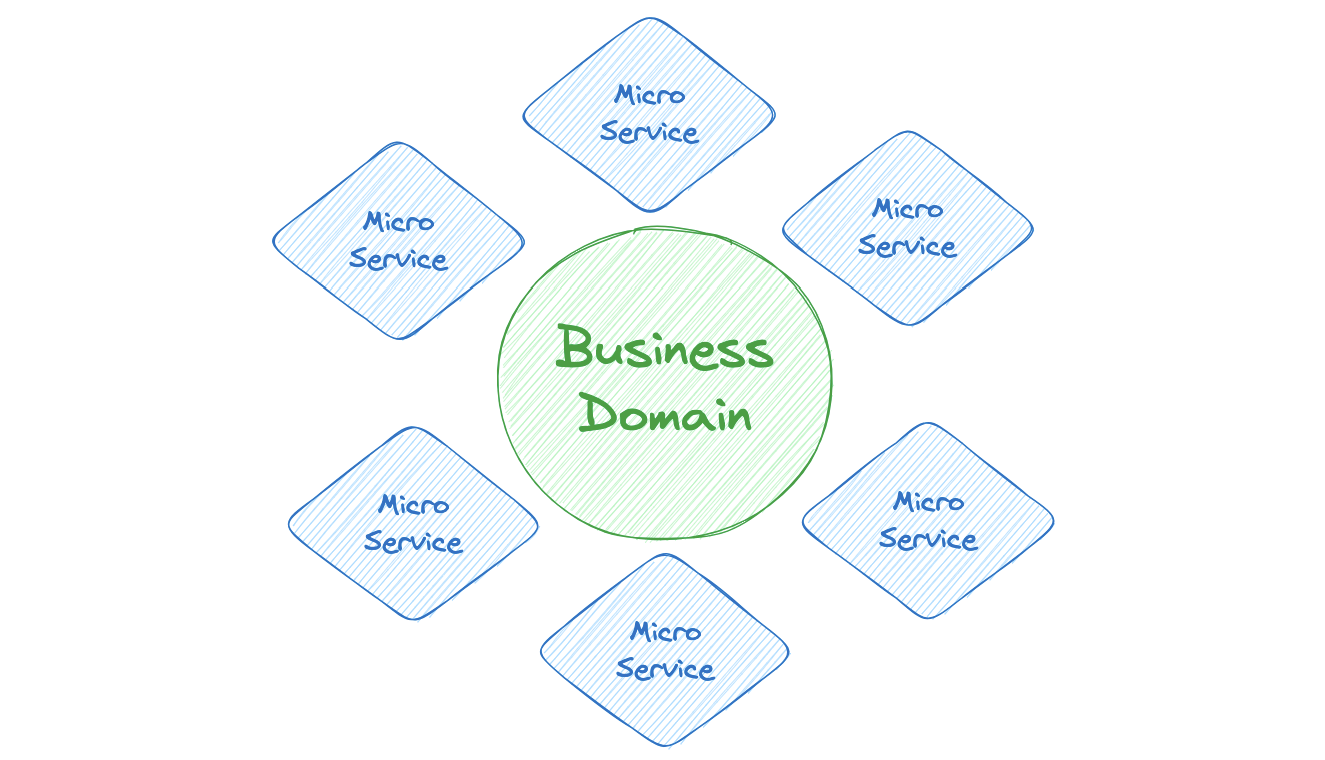

So what is microservice architecture?

Microservice architecture is a design pattern that involves decomposing applications into smaller, autonomous services that collaborate to fulfil the overall functionality. This architectural approach offers numerous advantages, including enhanced agility, scalability, and resilience. Each service operates independently, focusing on a specific business capability or function, and communicates with other services through well-defined interfaces. This loose coupling allows for individual services to be developed, deployed, and scaled independently, facilitating faster iterations and adaptability. By embracing microservices, organisations can build flexible and modular systems that can scale efficiently and withstand failures in a more resilient manner.

Why choose a microservice architecture?

To answer this, let’s look at how AWS offerings support a microservice architecture and review each requirement in turn.

- Go faster: AWS Serverless services, such as AWS Lambda, allow developers to focus on writing code without the need to provision or manage servers. This enables faster development and deployment cycles since developers can quickly iterate on their code and bring new features to market more rapidly.

- Improve stability: AWS Serverless services handle the operational aspects, including scaling, patching, and monitoring, reducing the burden on developers. The managed nature of these services helps improve overall system stability by leveraging AWS’s expertise and infrastructure, ensuring high availability and reliability.

- Increase quality: Serverless architecture promotes the use of small, focused functions or services. This modular approach enhances code maintainability, testability, and reusability. Additionally, the managed nature of serverless services allows developers to focus on writing high-quality code, conducting thorough testing, and implementing best practices.

- Improve release cycles: Serverless architecture enables faster and more frequent release cycles due to its decoupled and independent nature. Developers can deploy and update individual functions or services independently, without extensive coordination and without affecting the entire application. This allows for more efficient and rapid deployment of new features and bug fixes.

- Save money: AWS Serverless services follow a pay-per-use pricing model, where you are billed only for the actual usage of your functions or services. This granular pricing approach can result in cost savings, especially for applications with varying or unpredictable workloads. By automatically scaling resources based on demand, you can optimise costs and avoid over-provisioning.
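To make the pay-per-use point a little more concrete, here is a minimal sketch of how a bill based on request count and compute time could be estimated. Lambda charges per request and per GB-second of execution; the function name `estimate_monthly_cost` and the rates passed in below are placeholders chosen for illustration, not current AWS prices, and the sketch ignores any free tier.

```python
def estimate_monthly_cost(
    requests: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_million_requests: float,
    price_per_gb_second: float,
) -> float:
    """Estimate a monthly bill for a function billed per request and per GB-second."""
    # Compute time is billed as memory (in GB) multiplied by duration (in seconds).
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = (requests / 1_000_000) * price_per_million_requests
    return request_cost + gb_seconds * price_per_gb_second


# Placeholder rates: look up the real figures for your region before relying on this.
quiet_month = estimate_monthly_cost(100_000, 200, 512, 0.20, 0.0000167)
busy_month = estimate_monthly_cost(5_000_000, 200, 512, 0.20, 0.0000167)
print(f"quiet month: ${quiet_month:.2f}, busy month: ${busy_month:.2f}")
```

The useful property is that the cost follows traffic: a quiet month costs proportionally less, whereas a fixed rack of on-premise servers costs the same regardless of demand.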

We will discuss the proposed solution a little later, but you might have noticed a theme here: AWS Serverless technologies look like an ideal fit for building a microservice architecture whilst covering the requirements we set out as part of the migration.

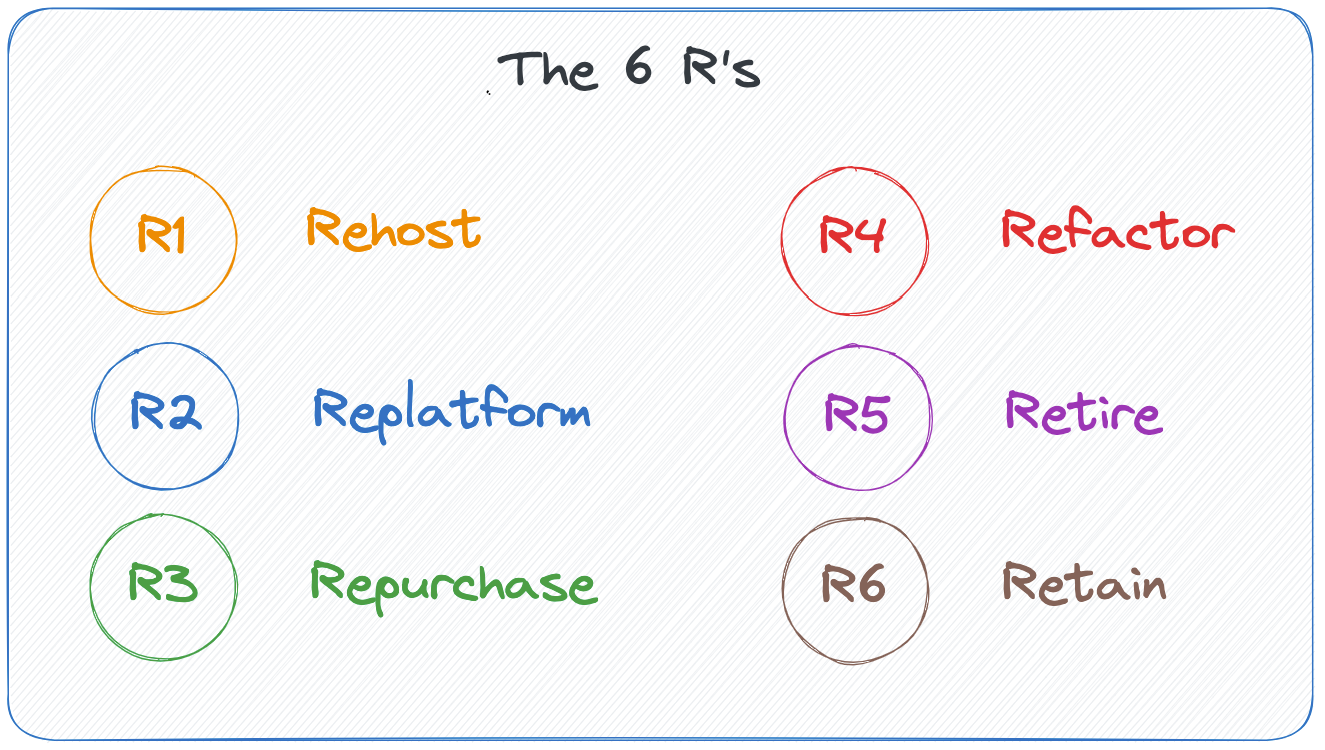

Approaches to migration

Migrating to the cloud demands careful consideration and planning. AWS recommends the “6 R’s of migration” to help businesses choose the right strategy for a successful transition. These strategies include:

- Rehost (lift and shift): Replicating applications in the cloud with minimal changes to the existing infrastructure.

- Replatform (lift, tinker, and shift): Making certain modifications to leverage cloud services and improve performance or cost efficiency.

- Repurchase (drop and shop): Replacing existing applications with ready-to-use software-as-a-service (SaaS) solutions.

- Refactor (re-architect): Redesigning and rebuilding applications using cloud-native technologies to fully leverage cloud benefits.

- Retire: Decommissioning applications or workloads that are no longer necessary.

- Retain (on-premises): Opting to keep certain applications or workloads on-premises rather than migrating them to the cloud.

By understanding and applying these strategies, businesses can make informed decisions about their cloud migration approach.

The Twelve-Factor app

The Twelve-Factor App is a methodology and set of best practices for building modern, cloud-native applications. Here’s a brief summary of the Twelve-Factor App principles:

- Codebase: Keep the codebase in version control and have a single codebase per application.

- Dependencies: Explicitly declare and isolate dependencies. Use package managers to manage dependencies.

- Config: Store configuration in the environment, separate from the codebase. Avoid hard-coding configuration values.

- Backing Services: Treat backing services (databases, caches, etc.) as attached resources and access them via well-defined APIs.

- Build, Release, Run: Strictly separate build, release, and run stages. Build artifacts should be immutable and promote reproducibility.

- Processes: Run the application as one or more stateless processes. Scale horizontally by adding more processes.

- Port Binding: Export services via port binding. The application should be self-contained and able to run on any available port.

- Concurrency: Scale out via the process model, allowing multiple processes to run simultaneously.

- Disposability: Maximise robustness with fast startup and graceful shutdown. Processes should be disposable, meaning they can be started or stopped at a moment’s notice.

- Dev/Prod Parity: Keep development, staging, and production environments as similar as possible to minimise discrepancies.

- Logs: Treat logs as event streams and capture them in a centralised location. Use proper tools for log aggregation and analysis.

- Admin Processes: Run administrative and management tasks as one-off processes. Avoid embedding them in the application code.

By following the Twelve-Factor App principles, developers can create applications that are scalable, portable, and resilient in cloud and distributed environments. If you are interested in finding out more about the Twelve-Factor App principles, check out 12factor.net.
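As an illustration of the Config factor from the list above, the following is a minimal sketch of an application reading its settings from environment variables rather than hard-coding them. The variable names (`DATABASE_URL`, `LOG_LEVEL`, `PORT`), the `Settings` class and the example connection string are assumptions made for this example and are not taken from the course material.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Settings:
    database_url: str
    log_level: str
    port: int


def load_settings(env: Optional[Mapping[str, str]] = None) -> Settings:
    """Build settings from environment variables instead of hard-coded values."""
    env = os.environ if env is None else env
    return Settings(
        # Required: raises KeyError straight away if the deploy forgot to set it.
        database_url=env["DATABASE_URL"],
        # Optional values fall back to development-friendly defaults.
        log_level=env.get("LOG_LEVEL", "INFO"),
        port=int(env.get("PORT", "8080")),
    )


# A plain dict stands in for os.environ here so the example runs anywhere.
settings = load_settings({"DATABASE_URL": "mysql://localhost/cloudair", "PORT": "9000"})
print(settings)
```

Because the code never changes between environments, the same build artifact can be promoted from development to production with only the variables differing, which also supports the Build, Release, Run and Dev/Prod Parity factors.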

Other Considerations

DevOps

DevOps emphasises the collaboration between development and operations teams to enhance agility in software development and deployment. It involves automating application releases, following the principles of the Twelve-Factor App, such as the “build, release, run” approach.

Continuous Integration/Continuous Deployment (CI/CD) enables confident and frequent releases to various environments.

To maintain security, secrets should be regularly rotated and securely stored using services like AWS Secrets Manager or AWS Systems Manager (SSM) Parameter Store. By implementing these practices, organisations can achieve faster, more reliable, and more secure software delivery.

The course covered AWS services such as CodePipeline, CodeCommit, CodeBuild, CodeDeploy, CodeStar, CloudFormation, CloudWatch and X-Ray which support DevOps agility.

Polyglot persistence

Polyglot persistence is an architectural approach to data storage that suggests using multiple databases or data storage technologies that are optimised for specific use cases or data types. The idea behind polyglot persistence is to use the best tool for the job, rather than trying to force all data into a single database.

The term “polyglot” refers to the ability to speak multiple languages, and in this context, it means that different types of data can be stored in different types of databases or data stores, depending on their unique characteristics.

Decentralisation is a key principle of polyglot persistence. Each service in the architecture should have ownership of its own data and data store, enabling independent scaling and schema changes without impacting other services.

The course covered AWS services such as RDS, Redshift, DynamoDB, S3 and ElastiCache which support polyglot persistence.
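The idea of each service owning a store suited to its access pattern can be sketched as follows. Both classes are hypothetical in-memory stand-ins written for this article (one playing the role of a key-value table such as DynamoDB, the other a short-lived cache such as ElastiCache); they are not AWS client code.

```python
import time
from typing import Callable, Dict, List, Optional, Tuple


class FlightStore:
    """Key-value store owned by the flight service (stand-in for a DynamoDB table)."""

    def __init__(self) -> None:
        self._items: Dict[str, dict] = {}

    def put(self, flight: dict) -> None:
        self._items[flight["flight_id"]] = flight

    def get(self, flight_id: str) -> Optional[dict]:
        return self._items.get(flight_id)


class SearchCache:
    """Short-lived cache owned by the search service (stand-in for ElastiCache)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, List[dict]]] = {}

    def set(self, query: str, results: List[dict]) -> None:
        self._entries[query] = (self._clock() + self._ttl, results)

    def get(self, query: str) -> Optional[List[dict]]:
        entry = self._entries.get(query)
        if entry is None:
            return None
        expires_at, results = entry
        if self._clock() >= expires_at:
            # Expired entries are dropped so stale results are never served.
            del self._entries[query]
            return None
        return results


flights = FlightStore()
flights.put({"flight_id": "CA100", "origin": "LHR", "destination": "JFK"})
cache = SearchCache(ttl_seconds=60)
cache.set("LHR-JFK", [flights.get("CA100")])
print(cache.get("LHR-JFK"))
```

Neither service reaches into the other’s store, so the flight service could change its schema or the search service could change its cache expiry without coordinating a release.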

How to migrate a monolith to a microservice architecture in AWS

Now that we understand what’s required to embark on a cloud journey, let’s talk about how we migrated the CloudAir platform in the course and what approach we took to reach the proposed final solution.

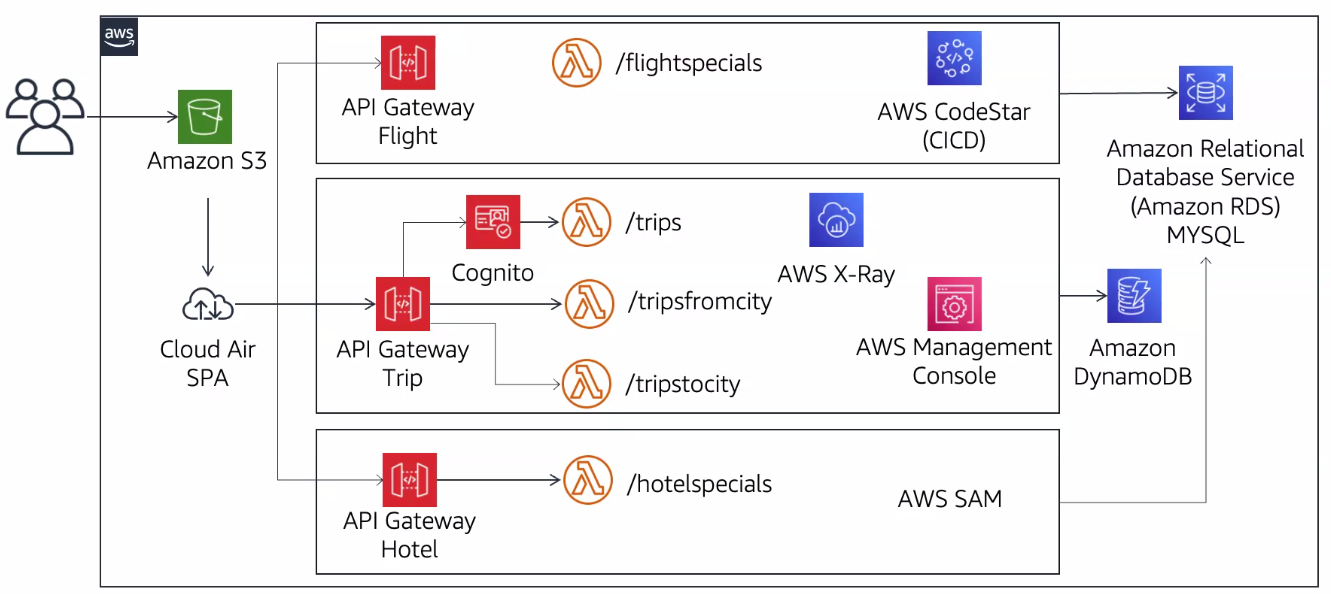

Proposed Solution

Diagram source: AWS

Phases of migration

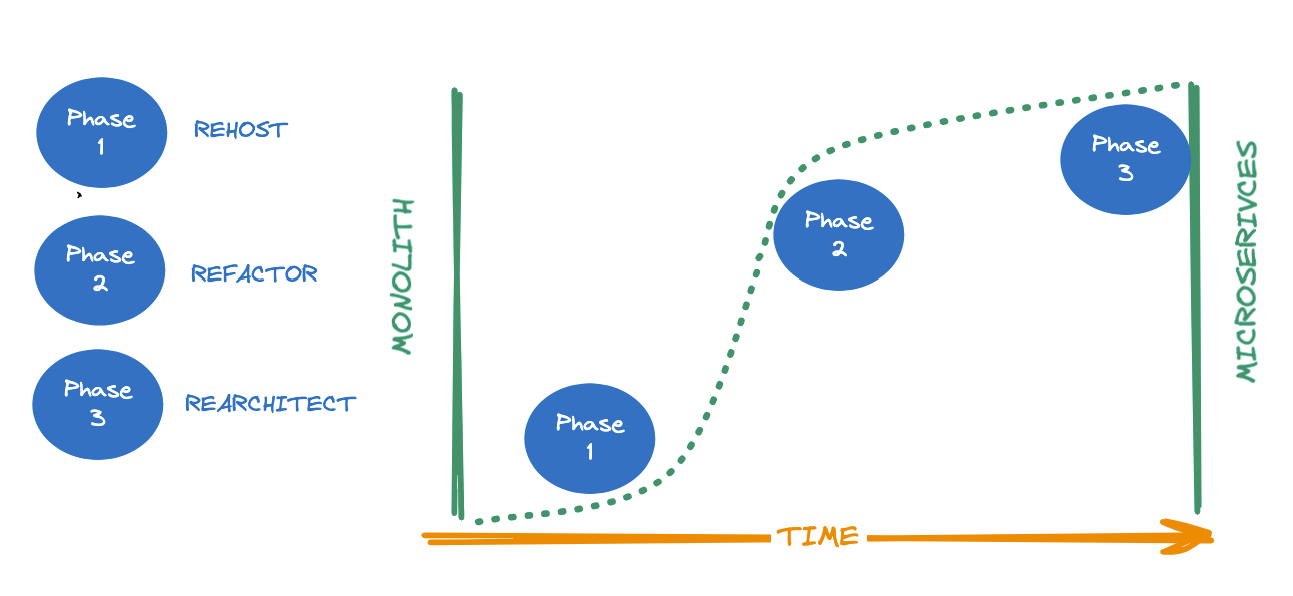

The migration was broken into phases so that the application reached the cloud as quickly as possible, allowing traffic to be redirected to the solution in AWS and the on-premise infrastructure to be decommissioned early. The phases were as follows:

Phase 1

The CloudAir platform was re-hosted by migrating the web application and database into AWS. This involved manually packaging the source code and uploading it to AWS Elastic Beanstalk. As the original database was SQL-based, it was migrated to RDS for MySQL. Due to the time constraints of the course, test data was manually inserted into the new RDS database. Additionally, configuration adjustments were made to enable communication between the Elastic Beanstalk instance and the RDS DB instance. With minimal effort, the platform was successfully re-hosted and became production-ready, allowing the on-premise infrastructure to be decommissioned.

Phase 2

During this refactor phase, the application underwent a transformation based on the Domain-Driven Design (DDD) software development methodology. DDD focuses on modelling software according to the business domain, fostering shared understanding between stakeholders and developers. It organises complex systems into manageable contexts using concepts like aggregates, domain events, value objects, and domain services.
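To give a feel for the DDD building blocks mentioned above, here is a minimal sketch of a value object, a domain event and an aggregate for a flight. The class names and the seat reservation rule are invented for illustration and are not taken from the CloudAir lab code.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Money:
    """Value object: immutable and compared by value rather than identity."""
    amount_cents: int
    currency: str


@dataclass(frozen=True)
class SeatReserved:
    """Domain event: a record of something that happened in the domain."""
    flight_id: str
    seat: str


@dataclass
class Flight:
    """Aggregate root: the only entry point for changing seat reservations."""
    flight_id: str
    capacity: int
    price: Money
    reserved_seats: List[str] = field(default_factory=list)
    events: List[SeatReserved] = field(default_factory=list)

    def reserve(self, seat: str) -> None:
        # Invariants are enforced inside the aggregate, in one place.
        if seat in self.reserved_seats:
            raise ValueError(f"seat {seat} is already reserved")
        if len(self.reserved_seats) >= self.capacity:
            raise ValueError("flight is full")
        self.reserved_seats.append(seat)
        self.events.append(SeatReserved(self.flight_id, seat))


flight = Flight("CA100", capacity=2, price=Money(19900, "GBP"))
flight.reserve("1A")
print(flight.events)
```

Grouping behaviour and rules this way is one way of finding service boundaries: everything that must stay consistent with a flight lives in the flight context, which makes it a natural candidate for its own microservice.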

In line with DDD principles, several microservices were identified and extracted from the monolithic codebase. These included a Flight Service, a Hotel Service, and a Trip Search Service. Each microservice was developed using API Gateway, Lambda functions, and DynamoDB as its data store. The code responsible for each domain was moved to its own repository and deployed using CI/CD processes, which ensured independent deployment and scalability.
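A microservice built this way can be quite small. The sketch below shows roughly what a Lambda function behind an API Gateway proxy integration might look like for a flight lookup. The handler name, the `flight_id` path parameter and the in-memory `FLIGHTS` dictionary (standing in for a DynamoDB table so the example runs without AWS access) are assumptions for illustration, not the lab’s actual code.

```python
import json
from typing import Any, Dict

# Stand-in for the service's DynamoDB table so the sketch runs without AWS access.
FLIGHTS: Dict[str, Dict[str, Any]] = {
    "CA100": {"flight_id": "CA100", "origin": "LHR", "destination": "JFK"},
}


def _response(status: int, payload: Dict[str, Any]) -> Dict[str, Any]:
    """Shape a result the way the API Gateway Lambda proxy integration expects."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def get_flight_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Handle GET /flights/{flight_id}."""
    # pathParameters is None when the request carries no path variables.
    flight_id = (event.get("pathParameters") or {}).get("flight_id")
    if not flight_id:
        return _response(400, {"message": "flight_id is required"})
    flight = FLIGHTS.get(flight_id)
    if flight is None:
        return _response(404, {"message": f"flight {flight_id} not found"})
    return _response(200, flight)


print(get_flight_handler({"pathParameters": {"flight_id": "CA100"}}, None))
```

In a real deployment the dictionary lookup would be replaced by a call to the service’s own DynamoDB table, and nothing outside the Flight Service would read that table directly.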

For the web application to consume the new microservices, its API endpoints were updated to point at them. This approach allowed for greater flexibility, modularity, and maintainability of the application, enabling future enhancements and scalability.

Phase 3

The final phase was to rearchitect the platform in AWS. This consisted of:

- The web application was transformed into a single-page application and hosted on S3 for efficient delivery and improved user experience.

- The Trip microservice Lambda underwent architectural enhancements. Instead of one Lambda function handling all trip-related endpoints, it was decomposed into multiple granular functions. Each endpoint now has its own dedicated Lambda function, ensuring a clear separation of concerns. This enables independent deployment, observability, and the ability to scale each endpoint individually.

- The API gateways for each microservice were configured with appropriate throttling, quota management, and DDoS protection to ensure optimal performance and security.

- A secure login process was implemented using Cognito. Bearer tokens were enforced to authenticate and authorize API requests, enhancing the overall security of the application.

- Full deployments were automated using CodeStar, streamlining the deployment process and ensuring consistency across environments.

- X-Ray tracing was enabled throughout the platform to improve observability. This allows for better understanding and troubleshooting of application performance, identifying bottlenecks, and optimising system efficiency.

With that, the migration was complete: we had put into practice how to migrate an on-premise monolith to a cloud-native, microservices-based architecture using AWS serverless services.
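The decomposition of the Trip microservice described in Phase 3 can be sketched as a before and after. The route paths, handler names and response payloads below are hypothetical and exist only to show the shape of the change; `httpMethod` and `resource` are fields of the API Gateway REST proxy event.

```python
import json
from typing import Any, Dict


def _ok(payload: Any) -> Dict[str, Any]:
    return {"statusCode": 200, "body": json.dumps(payload)}


# After the split: one small handler per endpoint, each deployable as its own function.
def search_trips_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """GET /trips"""
    params = event.get("queryStringParameters") or {}
    return _ok({"action": "search", "destination": params.get("destination")})


def get_trip_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """GET /trips/{trip_id}"""
    trip_id = (event.get("pathParameters") or {}).get("trip_id")
    return _ok({"action": "get", "trip_id": trip_id})


# Before the split: a single function that had to route every trip request itself.
ROUTES = {
    ("GET", "/trips"): search_trips_handler,
    ("GET", "/trips/{trip_id}"): get_trip_handler,
}


def trips_monolith_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    route = ROUTES.get((event.get("httpMethod"), event.get("resource")))
    if route is None:
        return {"statusCode": 404, "body": json.dumps({"message": "no such route"})}
    return route(event, context)


event = {"httpMethod": "GET", "resource": "/trips/{trip_id}", "pathParameters": {"trip_id": "t1"}}
print(trips_monolith_handler(event, None))
```

Once API Gateway maps each route directly to its own function, the routing table and `trips_monolith_handler` disappear, and each handler gets its own deployment, metrics and scaling behaviour.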

Final thoughts

OK, let’s wrap things up. In this article I shared my experience of attending the “Advanced Developing on AWS” training course, which focuses on migrating a monolithic application to a microservices architecture using AWS services. We covered the concept of a cloud journey, the benefits of migrating to the cloud, the reasons for choosing AWS as a cloud provider, and the challenges of a monolithic architecture. We explored the requirements and architecture patterns involved in migrating to AWS, with a focus on microservices. We also discussed the migration approaches (the 6 R’s), the Twelve-Factor App principles, DevOps practices, and polyglot persistence. Finally, we walked through the proposed solution and the phases of migration.

I thoroughly enjoyed the course as the content was highly relevant and provided comprehensive coverage of various AWS services. The instructor, Fabrizio, demonstrated exceptional teaching skills by delivering the material concisely and effectively. The practical labs were valuable in translating theoretical knowledge into real-world applications. However, I noticed that certain documentation within the lab instructions was outdated and could benefit from a review to ensure accuracy.

Considering the course’s quality, I believe it serves as an excellent resource for individuals aiming to obtain the AWS Certified Developer - Associate certification or delve deeper into AWS serverless services. It provides a solid foundation and acts as a stepping stone towards achieving these goals.

How did you find this article? Do you have a future project that will involve embarking on a cloud journey? If so, do you plan to follow a similar approach? Get in touch with your thoughts. Additionally, if you notice any outdated information in this article, please do not hesitate to contact me.